Visual voice activity detection as a help for speech source separation from convolutive mixtures



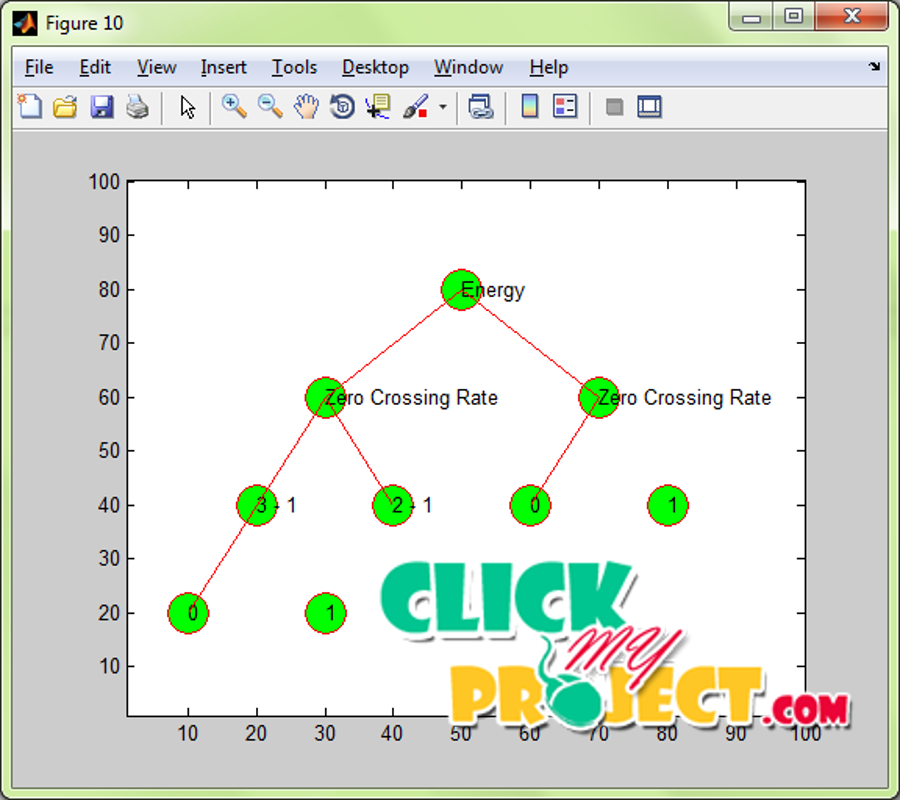

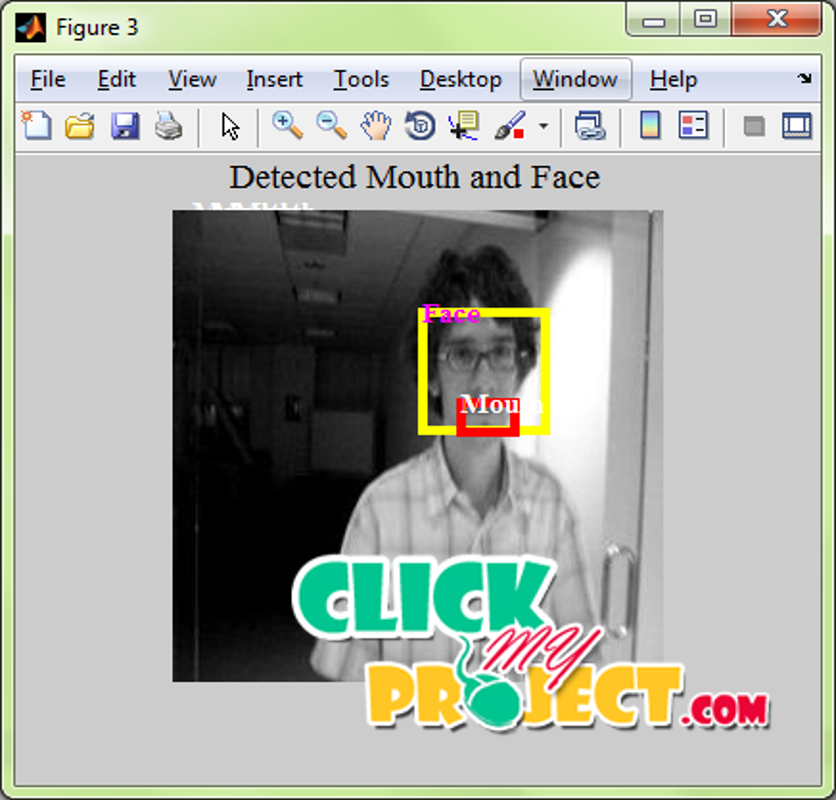

A video contains both audio signals and images. Separating the image frames from the audio is useful in many processes. Speech can be detected in a video by analysing its audio signal. The audio can be analysed in several ways, such as measuring the amplitude and locating the maximum peaks in the signal, or with a feature-based approach. Feature-based approaches can be more reliable because the extracted features are less affected by other disturbances in the signal. Here, speech is identified with a feature extraction method: feature values are computed from the voice signal to detect voice activity, and the frames in which speech is detected are identified. Energy and zero crossing rate are used as the features, and the difference between the feature values extracted from the frames is computed. The difference between the energies of two frames is zero when no speech is detected. The video frames in which speech occurs can then be identified and classified accordingly.

First, the input video is loaded. Loading the video means determining the number of frames and the size of each frame, i.e. its height and width. The video is then converted into image frames and a voice signal. The voice signal represents the amplitude of the speech signal. The face and mouth are detected in the frames with the Viola-Jones detection method, which gives the starting point, length, and breadth of the face and mouth regions in the image. Features are then extracted from the voice signal: energy and zero crossing rate, which are among the more reliable features for audio systems. The difference between the features extracted from the frames is then calculated.

The difference is one for frames that have voice activity and zero for frames that do not. A binary decision tree then decides whether each frame contains speech or non-speech.
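The audio side of the description can be illustrated with a short Python sketch. It is not the product's implementation. It assumes non-overlapping frames of a fixed length, absolute differences between consecutive frames, and a hand-written two-level threshold rule standing in for the binary decision tree. The function names, the parameters `frame_len`, `energy_thresh`, and `zcr_thresh`, and the convention that the first frame is labelled non-speech are all hypothetical choices made for illustration.

```python
def frame_energy(frame):
    """Short-time energy: sum of squared sample values."""
    return sum(s * s for s in frame)


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    if len(frame) < 2:
        return 0.0
    crossings = sum(
        1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0)
    )
    return crossings / (len(frame) - 1)


def split_frames(signal, frame_len):
    """Cut the signal into non-overlapping frames, dropping any remainder."""
    last_start = len(signal) - frame_len
    return [signal[i:i + frame_len] for i in range(0, last_start + 1, frame_len)]


def feature_differences(features):
    """Absolute change in (energy, ZCR) from the previous frame.

    Assumption: the first frame has no predecessor, so its difference is zero.
    """
    diffs = [(0.0, 0.0)]
    for (e_prev, z_prev), (e_cur, z_cur) in zip(features, features[1:]):
        diffs.append((abs(e_cur - e_prev), abs(z_cur - z_prev)))
    return diffs


def classify(d_energy, d_zcr, energy_thresh, zcr_thresh):
    """Two-level threshold rule (a stand-in for the binary decision tree)."""
    if d_energy > energy_thresh:
        return 1
    if d_zcr > zcr_thresh:
        return 1
    return 0


def detect_speech(signal, frame_len, energy_thresh, zcr_thresh):
    """Return one label per frame: 1 for speech, 0 for non-speech."""
    frames = split_frames(signal, frame_len)
    features = [(frame_energy(f), zero_crossing_rate(f)) for f in frames]
    return [
        classify(d_e, d_z, energy_thresh, zcr_thresh)
        for d_e, d_z in feature_differences(features)
    ]


# Toy signal: two silent frames, two active frames, two silent frames.
signal = [0.0] * 8 + [1.0, -1.0, 1.0, -1.0] + [2.0, -2.0, 2.0, -2.0] + [0.0] * 8
labels = detect_speech(signal, frame_len=4, energy_thresh=0.5, zcr_thresh=0.5)
```

Under these assumptions, two silent frames in a row give a zero difference and are labelled non-speech. A rule based only on differences also fires on the first silent frame after activity, because the features change there too. The thresholds would have to be tuned on real recordings.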