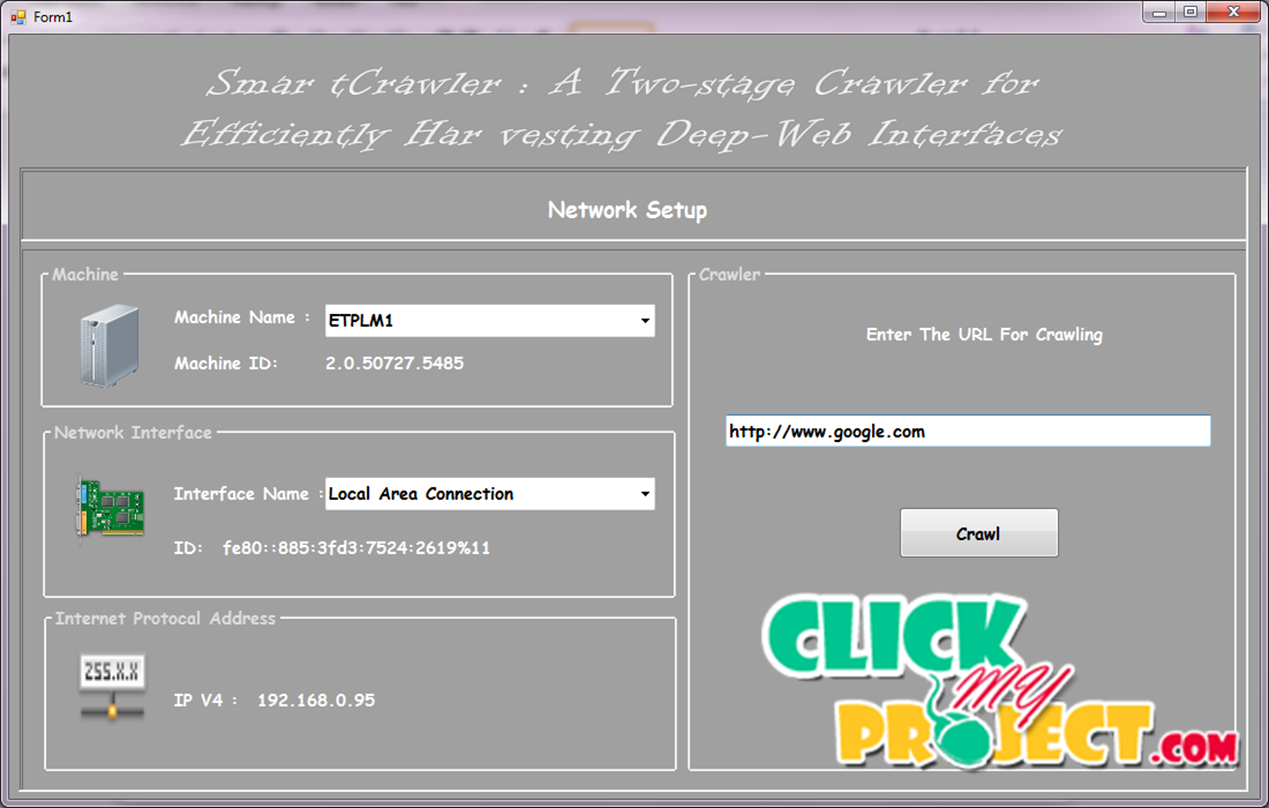

SmartCrawler: A Two-Stage Crawler for Efficiently Harvesting Deep-Web Interfaces

₹3,500.00

Search engines are a major breakthrough for retrieving information on the web. However, the lists of retrieved documents contain a high percentage of duplicate and near-duplicate results, so there is a need to improve search results. Some current search engines use data-filtering algorithms that eliminate duplicate and near-duplicate documents to save users' time and effort. Identifying similar or near-duplicate pairs in a large collection is a significant problem with widespread applications. The presence of duplicate documents on the World Wide Web adversely affects crawling, indexing, and relevance, which are the core building blocks of web search.

The system presents a set of techniques that mine rules from URLs and use these learnt rules for de-duplication based on URL strings alone, without explicitly fetching the content. The existing system used a very simple alignment heuristic to deal with irrelevant URL components; it is not publicly available and was not described in enough detail to be implemented. This project proposes the use of multiple alignment as a way to avoid the problems of simple pairwise rule extraction. The proposed system, DUSTER, finds and validates rules by splitting the available URLs into training and validation sets. DUSTER learns normalization rules that very precisely convert distinct URLs referring to the same content into a common canonical form, making such duplicates easy to detect.

As the deep web grows at a very fast pace, there has been increased interest in techniques that help locate deep-web interfaces efficiently. However, due to the large volume of web resources and the dynamic nature of the deep web, achieving both wide coverage and high efficiency is a challenging issue. We propose a two-stage framework, SmartCrawler, for efficiently harvesting deep-web interfaces.
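Returning to the DUSTER approach described above, the idea of validating URL rewrite rules on a held-out set and then grouping URLs by canonical form can be illustrated with a minimal Python sketch. It assumes rules are plain regex rewrite pairs and takes a hypothetical list of candidate rules as given, as if they had already been mined from a training split; DUSTER's own rule mining through multiple alignment of URLs is not reproduced here. The function names (`apply_rules`, `rule_precision`, `validate_rules`, `group_duplicates`), the 0.9 precision threshold, and the `example.com` URLs are illustrative only.

```python
import re
from typing import Dict, List, Tuple

# A rewrite rule is a (regex pattern, replacement) pair. This format is an
# assumption for illustration; DUSTER derives its rules by aligning URLs.
Rule = Tuple[str, str]


def apply_rules(url: str, rules: List[Rule]) -> str:
    """Rewrite a URL to its canonical form by applying rules in order."""
    for pattern, replacement in rules:
        url = re.sub(pattern, replacement, url)
    return url


def rule_precision(rule: Rule, labelled: Dict[str, str]) -> float:
    """Fraction of rule firings whose target URL has the same content id.

    `labelled` maps URL -> content id (e.g. a hash of the fetched page).
    """
    pattern, replacement = rule
    agree = fired = 0
    for url, content_id in labelled.items():
        target = re.sub(pattern, replacement, url)
        if target == url or target not in labelled:
            continue  # rule did not fire, or there is no evidence about the target
        fired += 1
        if labelled[target] == content_id:
            agree += 1
    return agree / fired if fired else 0.0


def validate_rules(candidates: List[Rule], validation: Dict[str, str],
                   threshold: float = 0.9) -> List[Rule]:
    """Keep only candidate rules that are precise on the held-out set."""
    return [r for r in candidates if rule_precision(r, validation) >= threshold]


def group_duplicates(urls: List[str], rules: List[Rule]) -> Dict[str, List[str]]:
    """Group URLs by canonical form; no page content is fetched here."""
    groups: Dict[str, List[str]] = {}
    for url in urls:
        groups.setdefault(apply_rules(url, rules), []).append(url)
    return groups


# Hypothetical candidate rules, as if mined from a training split.
candidates: List[Rule] = [
    (r"&sessionid=[^&]*", ""),  # drop a session parameter
    (r"://www\.", "://"),       # treat www.host and host as the same site
    (r"id=\d+", "id=1"),        # a bad rule that merges distinct stories
]

# Hypothetical validation split: URL -> content id.
validation = {
    "http://example.com/story?id=1": "A",
    "http://example.com/story?id=1&sessionid=xyz": "A",
    "http://www.example.com/story?id=1": "A",
    "http://example.com/story?id=2": "B",
    "http://example.com/story?id=2&sessionid=abc": "B",
    "http://www.example.com/story?id=2": "B",
}

rules = validate_rules(candidates, validation)
groups = group_duplicates([
    "http://www.example.com/story?id=3&sessionid=q",
    "http://example.com/story?id=3",
    "http://example.com/story?id=4",
], rules)
print(rules)
print(groups)
```

With this sample data the session-id and `www` rules reach precision 1.0 and are kept, while the `id` rewrite maps stories with different content onto each other, scores 0.0, and is rejected. The two `story?id=3` URLs then land in the same group from their URL strings alone.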
In the first stage, SmartCrawler performs site-based searching for center pages with the help of search engines, avoiding visits to a large number of pages. To achieve more accurate results for a focused crawl, SmartCrawler ranks websites to prioritize those most relevant to a given topic. In the second stage, SmartCrawler achieves fast in-site searching by excavating the most relevant links with adaptive link ranking. To eliminate bias toward visiting some highly relevant links in hidden web directories, we design a link tree data structure that achieves wider coverage of a website. Our experimental results on a set of representative domains show the agility and accuracy of the proposed crawler framework, which efficiently retrieves deep-web interfaces from large-scale sites and achieves higher harvest rates than other crawlers.
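The second-stage combination of adaptive link ranking and a link tree can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not SmartCrawler's implementation: the class names `LinkRanker` and `LinkTree`, the token-overlap score, grouping links by top-level directory, and round-robin scheduling across directories are all hypothetical choices made to show how a tree of directories can spread a crawl beyond one highly ranked branch.

```python
import heapq
import re
from collections import Counter, deque
from typing import Deque, Dict, List, Optional, Set, Tuple
from urllib.parse import urlparse

STOPWORDS = {"html", "htm", "php", "asp"}  # file extensions carry no topic signal


def tokens(text: str) -> Set[str]:
    """Lowercase alphanumeric tokens, minus file-extension noise."""
    return {t for t in re.split(r"[^a-z0-9]+", text.lower())
            if t and t not in STOPWORDS}


class LinkRanker:
    """Hypothetical adaptive ranker: a link scores by how often its URL-path and
    anchor tokens appear in a term table that grows from successful links."""

    def __init__(self, topic_terms: List[str]) -> None:
        self.terms: Counter = Counter(topic_terms)

    def features(self, url: str, anchor: str) -> Set[str]:
        return tokens(urlparse(url).path) | tokens(anchor)

    def score(self, url: str, anchor: str) -> int:
        return sum(self.terms[t] for t in self.features(url, anchor))

    def learn(self, url: str, anchor: str) -> None:
        """Call when a followed link led to a searchable form."""
        self.terms.update(self.features(url, anchor))


class LinkTree:
    """Links grouped by top-level directory, served round-robin across
    directories and best-scored first within one. Scores are fixed when a
    link is added."""

    def __init__(self, ranker: LinkRanker) -> None:
        self.ranker = ranker
        self.branches: Dict[str, List[Tuple[int, int, str]]] = {}
        self.order: Deque[str] = deque()  # branches that still hold links
        self.seq = 0  # tie-breaker: earlier links first among equal scores

    def add(self, url: str, anchor: str = "") -> None:
        parts = [p for p in urlparse(url).path.split("/") if p]
        branch = parts[0] if len(parts) > 1 else "/"  # root-level files share "/"
        if branch not in self.branches:
            self.branches[branch] = []
            self.order.append(branch)
        self.seq += 1
        # heapq is a min-heap, so negate the score to pop the best link first.
        heapq.heappush(self.branches[branch],
                       (-self.ranker.score(url, anchor), self.seq, url))

    def pop(self) -> Optional[str]:
        """Return the best link of the next directory in turn, or None."""
        if not self.order:
            return None
        branch = self.order.popleft()
        heap = self.branches[branch]
        url = heapq.heappop(heap)[2]
        if heap:
            self.order.append(branch)  # revisit this directory on a later turn
        else:
            del self.branches[branch]
        return url


ranker = LinkRanker(["search", "cars"])
tree = LinkTree(ranker)
for url, anchor in [
    ("http://example.com/cars/search.html", "Search cars"),
    ("http://example.com/cars/list.html", "All listings"),
    ("http://example.com/cars/advanced-search.html", "Advanced search"),
    ("http://example.com/news/today.html", "Today"),
    ("http://example.com/about.html", "About us"),
]:
    tree.add(url, anchor)

visit_order = []
while (link := tree.pop()) is not None:
    visit_order.append(link)
print(visit_order)
```

A purely best-first queue over these sample links would visit both high-scoring `/cars/` search pages before anything else. The round-robin over directories instead yields `search.html`, `today.html`, `about.html`, `advanced-search.html`, `list.html`, which is the kind of wider coverage the link tree is meant to provide. Calling `ranker.learn(...)` after a link leads to a form adds its tokens to the term table, so later links sharing those tokens score higher.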