Learning-Based Superresolution Land Cover Mapping

US$51.90



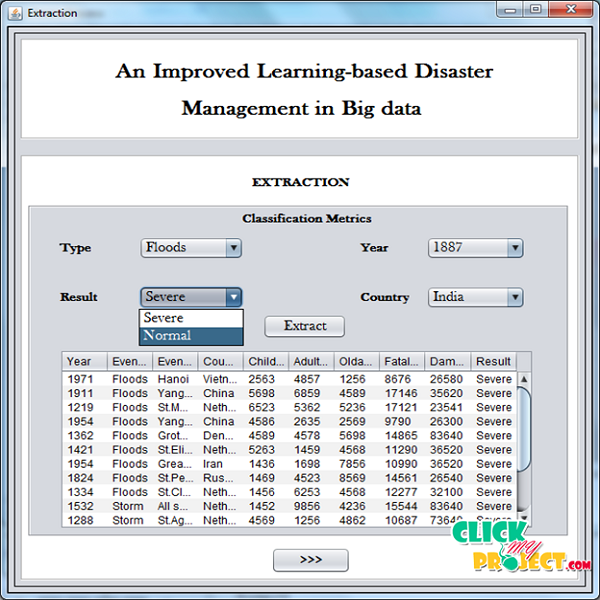

The MapReduce programming model has drawn attention because it suits distributed, large-scale data processing, subject to certain constraints and properties. Over the last few years, organizations across the public and private sectors have made a strategic decision to turn big data into a competitive advantage. The challenge of extracting value from big data is similar in many ways to the age-old problem of distilling business intelligence from transactional data. At the heart of this challenge is the process used to extract data from multiple sources, transform it to fit analytical needs, and load it into a data warehouse for subsequent analysis, a process known as “Extract, Transform, and Load” (ETL). The nature of big data requires that the infrastructure for this process scale cost-effectively. Hadoop has emerged as the de facto standard for managing big data. Its storage component, the Hadoop Distributed File System (HDFS), is designed to reliably store very large data sets on clusters and to stream those data at high throughput to user applications. This whitepaper examines some of the platform hardware and software considerations in using Hadoop for ETL. The results of an experimental analysis using an SVM classifier on data sets of different sizes and different cluster configurations demonstrate the potential of the tool, as well as aspects that affect its performance. The results show that the proposed approach tackles practical problems in the context of big data.
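As a rough illustration of how an ETL step can be phrased in MapReduce terms, the following is a minimal single-process sketch in plain Python. It is not the whitepaper's implementation and does not use Hadoop. The record layout (`date,store,amount`), the sample data, and every function name are assumptions made for this example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical raw records from two sources; the field layout is an assumption.
SOURCE_A = ["2024-01-01,store1,10.50", "2024-01-01,store2,4.25"]
SOURCE_B = ["2024-01-02,store1,3.00", "bad-record", "2024-01-02,store2,6.75"]


def extract(*sources: Iterable[str]) -> Iterator[str]:
    """Extract: stream raw lines from every source in turn."""
    for source in sources:
        yield from source


def map_record(line: str) -> Iterator[Tuple[str, float]]:
    """Transform (map phase): emit (store, amount), skipping malformed lines."""
    parts = line.split(",")
    if len(parts) != 3:
        return
    _, store, amount = parts
    try:
        yield store, float(amount)
    except ValueError:
        return


def shuffle(pairs: Iterable[Tuple[str, float]]) -> Dict[str, List[float]]:
    """Group values by key, as a MapReduce framework does between phases."""
    groups: Dict[str, List[float]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_group(key: str, values: List[float]) -> Tuple[str, float]:
    """Transform (reduce phase): aggregate all amounts for one store."""
    return key, sum(values)


def run_etl(*sources: Iterable[str]) -> Dict[str, float]:
    """Load: write the reduced totals into a dict standing in for a warehouse."""
    pairs = (pair for line in extract(*sources) for pair in map_record(line))
    warehouse: Dict[str, float] = {}
    for key, values in shuffle(pairs).items():
        store, total = reduce_group(key, values)
        warehouse[store] = total
    return warehouse


if __name__ == "__main__":
    print(run_etl(SOURCE_A, SOURCE_B))
```

In a real Hadoop deployment the map and reduce functions would run in parallel across cluster nodes, with input and output held in HDFS. The sketch only shows how the extract, transform, and load stages line up with the map, shuffle, and reduce phases.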